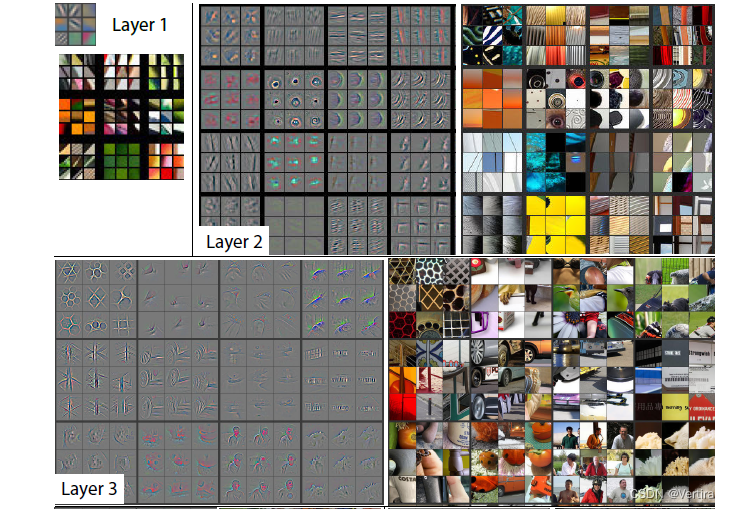

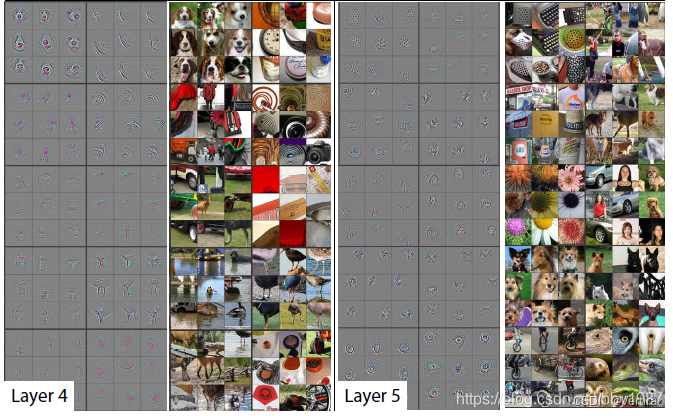

Figures from "Visualizing and Understanding Convolutional Networks", published by Zeiler and Fergus in 2013.

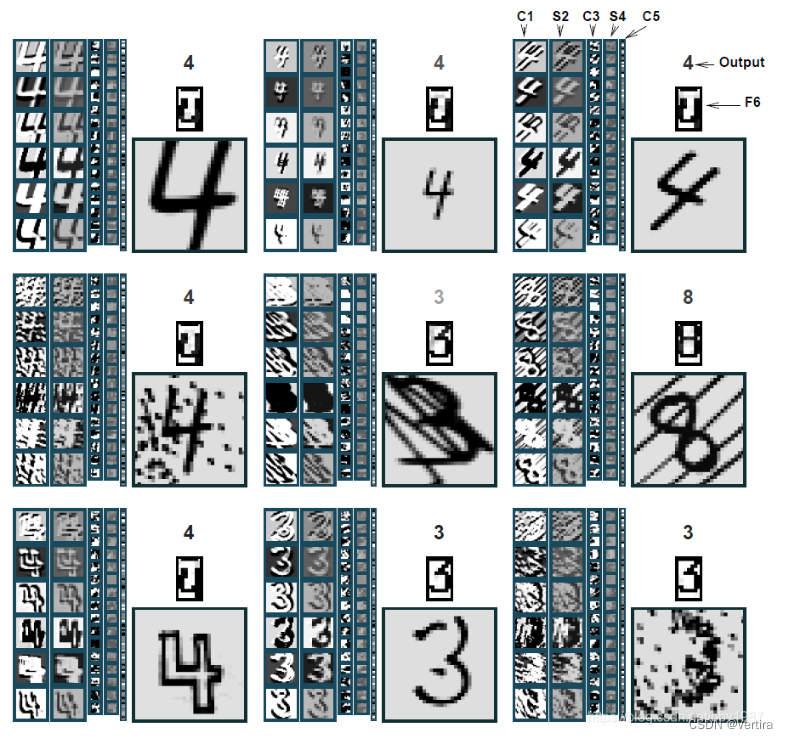

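A figure from LeCun's earlier 1998 paper, "Gradient-Based Learning Applied to Document Recognition", is also excellent, and I personally find it more illuminating than Zeiler's 2013 paper. The F6 features in that figure show clearly how images whose handwriting differs widely end up with invariant representations in the deep features.

PyTorch has a dedicated interface for extracting feature maps

There are several ways to get a feature map. One is to start from the input and run the network forward one operator at a time, returning the feature map once the desired layer is reached. That works, but it is somewhat tedious. PyTorch provides a dedicated interface for capturing feature maps during the forward pass: torch.nn.Module.register_forward_hook. Once we have the feature maps, torchvision offers convenient utilities for tiling and saving them as images: torchvision.utils.make_grid and torchvision.utils.save_image.

Here is the full code; it can be copied and run as is.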

    # -*- coding: utf-8 -*-
    import os
    import shutil
    import time

    import torch
    import torch.nn as nn
    from torchvision import transforms
    from torchvision import datasets
    import torchvision.utils as vutil
    from torch.utils.data import DataLoader
    import torchsummary

    DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    EPOCH = 1
    LR = 0.001
    TRAIN_BATCH_SIZE = 64
    TEST_BATCH_SIZE = 32
    BASE_CHANNEL = 32
    INPUT_CHANNEL = 1
    INPUT_SIZE = 28
    MODEL_FOLDER = './save_model'
    IMAGE_FOLDER = './save_image'
    INSTANCE_FOLDER = None

    class Model(nn.Module):
        def __init__(self, input_ch, num_classes, base_ch):
            super(Model, self).__init__()

            self.num_classes = num_classes
            self.base_ch = base_ch
            self.feature_length = base_ch * 4

            self.net = nn.Sequential(
                nn.Conv2d(input_ch, base_ch, kernel_size=3, padding=1),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2),
                nn.Conv2d(base_ch, base_ch * 2, kernel_size=3, padding=1),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2),
                nn.Conv2d(base_ch * 2, self.feature_length, kernel_size=3,
                          padding=1),
                nn.ReLU(),
                nn.AdaptiveAvgPool2d(output_size=(1, 1))
            )
            self.fc = nn.Linear(in_features=self.feature_length,
                                out_features=num_classes)

        def forward(self, input):
            output = self.net(input)
            output = output.view(-1, self.feature_length)
            output = self.fc(output)
            return output

    def load_dataset():
        train_dataset = datasets.MNIST(root='./data',
                                       train=True,
                                       transform=transforms.ToTensor(),
                                       download=True)
        test_dataset = datasets.MNIST(root='./data',
                                      train=False,
                                      transform=transforms.ToTensor(),
                                      download=True)
        return train_dataset, test_dataset

    def hook_func(module, input, output):
        """
        Forward hook passed to register_forward_hook

        Parameters:
        -----------
        module: module the hook is attached to
        input: tuple of inputs to the module
        output: output of the module
        """
        image_name = get_image_name_for_hook(module)
        data = output.clone().detach()
        # [1, C, H, W] -> [C, 1, H, W]: one single-channel image per feature map
        data = data.permute(1, 0, 2, 3)
        vutil.save_image(data, image_name, pad_value=0.5)

    def get_image_name_for_hook(module):
        """
        Generate the image filename for the hook function

        Parameters:
        -----------
        module: module of neural network
        """
        os.makedirs(INSTANCE_FOLDER, exist_ok=True)
        base_name = str(module).split('(')[0]
        index = 0
        image_name = '.'  # '.' always exists, so the loop body runs at least once
        while os.path.exists(image_name):
            index += 1
            image_name = os.path.join(
                INSTANCE_FOLDER, '%s_%d.png' % (base_name, index))
        return image_name

    if __name__ == '__main__':
        time_beg = time.time()

        train_dataset, test_dataset = load_dataset()
        train_loader = DataLoader(dataset=train_dataset,
                                  batch_size=TRAIN_BATCH_SIZE,
                                  shuffle=True)
        test_loader = DataLoader(dataset=test_dataset,
                                 batch_size=TEST_BATCH_SIZE,
                                 shuffle=False)

        # Use DEVICE instead of a hard-coded .cuda() so the script also runs on CPU.
        model = Model(input_ch=INPUT_CHANNEL, num_classes=10,
                      base_ch=BASE_CHANNEL).to(DEVICE)
        # torchsummary defaults to device="cuda"; pass the device type explicitly.
        torchsummary.summary(
            model, input_size=(INPUT_CHANNEL, INPUT_SIZE, INPUT_SIZE),
            device=DEVICE.type)

        criterion = nn.CrossEntropyLoss()
        optimizer = torch.optim.Adam(model.parameters(), lr=LR)

        train_loss = []
        for ep in range(EPOCH):
            # ----------------- train -----------------
            model.train()
            time_beg_epoch = time.time()
            loss_recorder = []
            for data, classes in train_loader:
                data, classes = data.to(DEVICE), classes.to(DEVICE)
                optimizer.zero_grad()
                output = model(data)
                loss = criterion(output, classes)
                loss.backward()
                optimizer.step()

                loss_recorder.append(loss.item())
                time_cost = time.time() - time_beg_epoch
                print('\rEpoch: %d, Loss: %0.4f, Time cost (s): %0.2f' % (
                    ep, loss_recorder[-1], time_cost), end='')

            # print train info after one epoch
            train_loss.append(loss_recorder)
            mean_loss_epoch = torch.mean(torch.Tensor(loss_recorder))
            time_cost_epoch = time.time() - time_beg_epoch
            print('\rEpoch: %d, Mean loss: %0.4f, Epoch time cost (s): %0.2f' % (
                ep, mean_loss_epoch.item(), time_cost_epoch), end='')

            # save model
            os.makedirs(MODEL_FOLDER, exist_ok=True)
            model_filename = os.path.join(MODEL_FOLDER, 'epoch_%d.pth' % ep)
            torch.save(model.state_dict(), model_filename)

            # ----------------- test -----------------
            model.eval()
            correct = 0
            total = 0
            with torch.no_grad():  # no gradients needed for evaluation
                for data, classes in test_loader:
                    data, classes = data.to(DEVICE), classes.to(DEVICE)
                    output = model(data)
                    _, predicted = torch.max(output, 1)
                    total += classes.size(0)
                    correct += (predicted == classes).sum().item()
            print(', Test accuracy: %0.4f' % (correct / total))

        print('Total time cost: ', time.time() - time_beg)

        # ----------------- visualization -----------------
        # clear output folder
        if os.path.exists(IMAGE_FOLDER):
            shutil.rmtree(IMAGE_FOLDER)

        model.eval()
        modules_for_plot = (torch.nn.ReLU, torch.nn.Conv2d,
                            torch.nn.MaxPool2d, torch.nn.AdaptiveAvgPool2d)
        for name, module in model.named_modules():
            if isinstance(module, modules_for_plot):
                module.register_forward_hook(hook_func)

        # batch_size=1: each forward pass saves the feature maps of one image
        test_loader = DataLoader(dataset=test_dataset,
                                 batch_size=1,
                                 shuffle=False)
        index = 1
        with torch.no_grad():
            for data, classes in test_loader:
                # module-level global read by get_image_name_for_hook
                INSTANCE_FOLDER = os.path.join(
                    IMAGE_FOLDER, '%d-%d' % (index, classes.item()))
                data, classes = data.to(DEVICE), classes.to(DEVICE)
                outputs = model(data)

                index += 1
                if index > 20:
                    break

Output:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1           [-1, 32, 28, 28]             320
                  ReLU-2           [-1, 32, 28, 28]               0
             MaxPool2d-3           [-1, 32, 14, 14]               0
                Conv2d-4           [-1, 64, 14, 14]          18,496
                  ReLU-5           [-1, 64, 14, 14]               0
             MaxPool2d-6             [-1, 64, 7, 7]               0
                Conv2d-7            [-1, 128, 7, 7]          73,856
                  ReLU-8            [-1, 128, 7, 7]               0
     AdaptiveAvgPool2d-9            [-1, 128, 1, 1]               0
               Linear-10                   [-1, 10]           1,290
    ================================================================
    Total params: 93,962
    Trainable params: 93,962
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.00
    Forward/backward pass size (MB): 0.74
    Params size (MB): 0.36
    Estimated Total Size (MB): 1.10
    ----------------------------------------------------------------
    Epoch: 0, Mean loss: 0.6502, Epoch time cost (s): 5.53, Test accuracy: 0.9360
    Total time cost: 13.36158013343811
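The parameter counts in the summary can be checked by hand. The sketch below recomputes them from the layer shapes, assuming the standard counts for a convolution with bias (one k×k kernel per input/output channel pair plus one bias per output channel) and for a linear layer with bias. The helper names `conv2d_params` and `linear_params` are made up for this example.

```python
def conv2d_params(in_ch, out_ch, kernel_size):
    # One k x k kernel per (input, output) channel pair, plus one bias per output channel.
    return in_ch * out_ch * kernel_size * kernel_size + out_ch


def linear_params(in_features, out_features):
    # One weight per (input, output) pair, plus one bias per output.
    return in_features * out_features + out_features


base_ch = 32  # BASE_CHANNEL in the script
counts = {
    "Conv2d-1": conv2d_params(1, base_ch, 3),
    "Conv2d-4": conv2d_params(base_ch, base_ch * 2, 3),
    "Conv2d-7": conv2d_params(base_ch * 2, base_ch * 4, 3),
    "Linear-10": linear_params(base_ch * 4, 10),
}
total = sum(counts.values())
print(counts)  # {'Conv2d-1': 320, 'Conv2d-4': 18496, 'Conv2d-7': 73856, 'Linear-10': 1290}
print(total)   # 93962
```

The ReLU, pooling, and adaptive pooling layers have no learnable parameters, which is why they show 0 in the table.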

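The model checkpoints and feature maps are saved in these two folders, relative to the directory the script is run from:

    MODEL_FOLDER = './save_model'
    IMAGE_FOLDER = './save_image'

The script creates both folders itself with os.makedirs(..., exist_ok=True), so they do not have to exist beforehand. Note that the visualization step deletes any existing IMAGE_FOLDER before writing new images.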

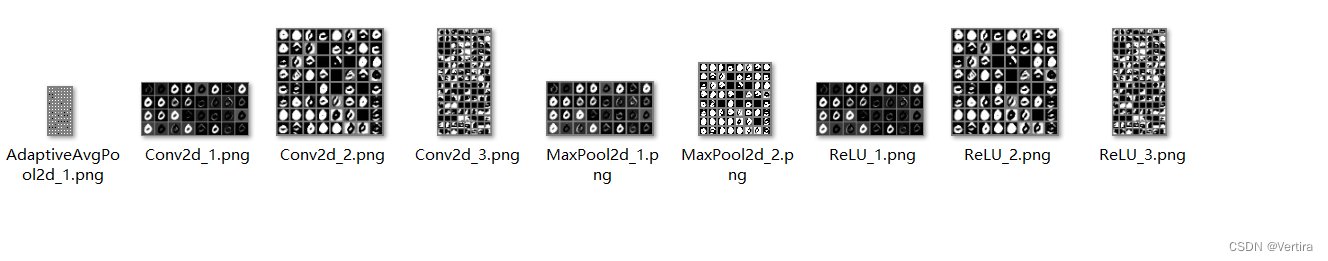

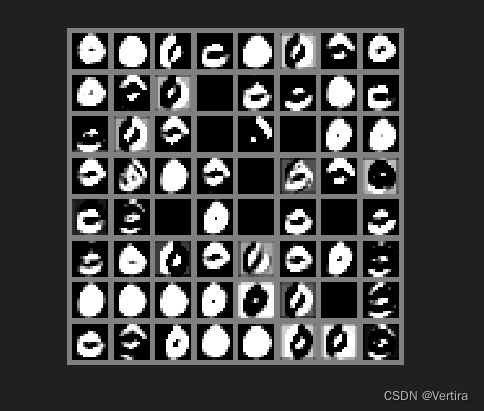

Here is the result of running it on my machine.

Opening one of the folders:

And one of the images from it:

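The important part of the code is the visualization section:

1. The batch_size of test_loader is set to 1, so each saved image shows the feature maps of a single input.

2. The following code registers the forward hooks. As long as register_forward_hook has been called before the forward pass runs, the hook is invoked during the forward pass.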

    modules_for_plot = (torch.nn.ReLU, torch.nn.Conv2d,
                        torch.nn.MaxPool2d, torch.nn.AdaptiveAvgPool2d)
    for name, module in model.named_modules():
        if isinstance(module, modules_for_plot):
            module.register_forward_hook(hook_func)

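3. hook_func(module, input, output) has to be implemented by yourself. Its parameter list is fixed and cannot be extended or shortened at will, which creates an awkward problem: we cannot pass information such as the output filename to hook_func as an argument, so I used a rather crude approach. The filename prefix is the module's type name, followed by an index giving the order in which modules of that type appear in the network. That index cannot be passed to hook_func either, so I start from index 1 and check which files already exist on disk to decide the next index; this is what get_image_name_for_hook does. It is a crude workaround born of necessity; if anyone knows a better way, please leave a comment.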

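4. In hook_func, before saving the image, the dimensions of input or output have to be rearranged from [1, C, H, W] to [C, 1, H, W]. save_image treats the first dimension as a batch of images, so after the permutation each channel becomes its own single-channel tile.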
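On the question raised in item 3: one possible alternative, sketched here rather than taken from the article, is to bind the extra information to the hook before registering it, for example with functools.partial. PyTorch still calls the hook with (module, input, output), but the bound arguments come first. The small network, `save_hook`, `features`, and `type_counts` are hypothetical names for this sketch, and the outputs are kept in a dictionary instead of being written to disk.

```python
import functools

import torch
import torch.nn as nn


def save_hook(name, store, module, inputs, output):
    # `name` and `store` are bound in advance; PyTorch supplies the last three.
    store[name] = output.detach()


# Hypothetical small network standing in for the article's Model.
net = nn.Sequential(
    nn.Conv2d(1, 4, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
    nn.Conv2d(4, 8, kernel_size=3, padding=1),
    nn.ReLU(),
)

features = {}     # name -> captured feature map
type_counts = {}  # module type name -> how many seen so far
handles = []
for module in net.modules():
    if isinstance(module, (nn.Conv2d, nn.ReLU, nn.MaxPool2d)):
        type_name = type(module).__name__
        type_counts[type_name] = type_counts.get(type_name, 0) + 1
        name = "%s_%d" % (type_name, type_counts[type_name])
        hook = functools.partial(save_hook, name, features)
        handles.append(module.register_forward_hook(hook))

with torch.no_grad():
    net(torch.rand(1, 1, 28, 28))  # random stand-in for an MNIST image

for handle in handles:
    handle.remove()

print(sorted(features))
```

With this approach the index is decided once at registration time, so nothing has to be inferred from the files already on disk. The same binding could carry an output folder or filename instead of a dictionary.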

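save_image() places 8 feature maps per row by default; this can be changed with the nrow parameter. pad_value is the color of the gaps between the feature maps. Although PyTorch declares it as an integer, the Tensor is usually of float type, so a float in [0, 1] has to be passed for it to take effect.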
