豆瓣案例

一、scrapy安装

pip install scrapy

出错提示to update pip,请升级pip

python -m pip install --upgrade pip

如果下载过慢也可以先修改镜像,再下载

pip config set global.index-url https://pypi.doubanio.com/simple修改镜像

二、scrapy的基本使用(爬虫项目创建->爬虫文件创建->运行 + 爬虫项目结构 + response的属性和方法🌟)

1、创建项目

pycharm命令行终端中:

scrapy startproject 项目名 如:

scrapy startproject spider2024

2、创建爬虫文件

scrapy genspider 爬虫文件名 要爬取的网页 如:

scrapy genspider douban https://movie.douban.com/

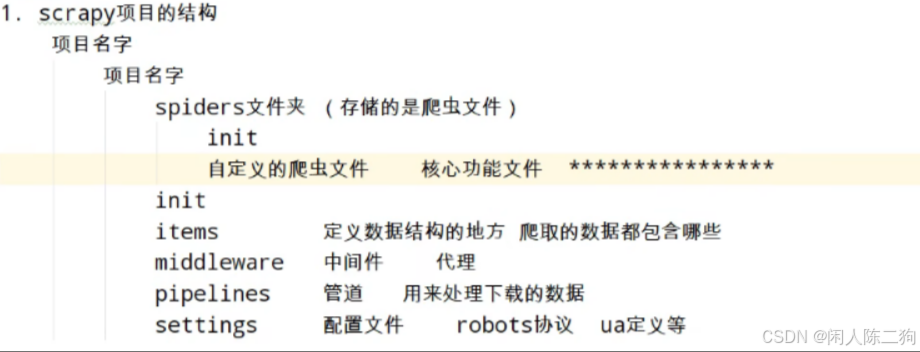

3、scrapy项目的结构

4、运行爬虫文件

scrapy crawl 爬虫文件名

如:scrapy crawl douban

注释:在settings.py文件中,注释掉ROBOTSTXT_OBEY = True,才能爬取拥有反爬协议的网页

5、response的属性和方法(爬虫的处理主要是对response进行操作,从这里开始主要对生成的爬虫文件进行操作)

#该方法中response相当于response = request.get()

response.text () 用于获取响应的内容

response.body () 用于获取响应的二进制数据

response.xpath() 可以直接使用xpath方法来解析response中的内容

response.xpath().extract() 提取全部seletor对象的data属性值,返回字符串列表

response.xpath().extract_first() 提取seletor列表的第一个数据,返回字符串

豆瓣案例:

- douban.py

import scrapy

#from typing import Iterable

#from scrapy import Request

from scrapy.http import HtmlResponse

from..items import MovieItem

class DoubanSpider(scrapy.Spider):

name = "douban"

allowed_domains = ["movie.douban.com"]

def start_requests(self):

for page in range(10):

yield scrapy.Request(url=f'https://movie.douban.com/top250?start={

page*25}&filter=')

def parse(self, response:HtmlResponse):

list_tiems= response.css('#content > div > div.article > ol > li')

for list_item in list_tiems:

movie_item = MovieItem()

movie_item['title'] = list_item.css('span.title::text').extract_first()

movie_item['rank']= list_item.css('span.rating_num::text').extract_first()

movie_item['subject'] = list_item.css('span.inq::text').extract_first()

yield movie_item

#

# 获取分页器的链接,再利用scrapy.Request来发送请求并进行后续的处理。但会有个bug

# href_list = response.css('div.paginator > a::attr(href)')

# for href in href_list:

# urls = response.urljoin(href.extract())

# yield scrapy.Request(url=urls)

- item.py

# Define here the models for your scraped items

#

# See documentation in:

# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy

#爬虫获取到的数据需要组装成Item对象

class MovieItem(scrapy.Item):

title = scrapy.Field()

rank = scrapy.Field()

subject = scrapy.Field()

- settings.py

修改请求头:

USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.1.6221.95 Safari/537.36"

随机延时:

DOWNLOAD_DELAY = 3

RANDOMIZE_DOWNLOAD_DELAY=True

保存文件设置:

ITEM_PIPELINES = {

"spider2024.pipelines.Spider2024Pipeline": 300,#数字小的先执行

}

4.pipeline.py

import openpyxl

class Spider2024Pipeline:

def __init__(self):

self.wb = openpyxl.workbook()

self.ws = self.wb.active

self.ws.title='Top250'

self.ws.append(('标题','评分','主题'))

def close_spider(self, spider):

self.wb.save('电影数据.xlsx')

def process_item(self, item, spider):

# self.ws.append((item['title'], item['rank'],item['subject']))

# 这样获取数据,如果数据为空会报错;这里建议用get获取

title=item.get('title','')#给个默认空值

rank = item.get('rank', '') # 给个默认空值

subject = item.get('subject', '') # 给个默认空值

self.ws.append((title, rank, subject))

return item